It’s really hard to find a modern website that doesn’t use JavaScript. It simply makes it easier to create dynamic and fancy websites. When you want to scrape JavaScript-generated content, you will quickly realize that Scrapy and other web scraping libraries cannot run JavaScript code while scraping. First, you should try to find a way to make the data visible without executing any JavaScript. If you can’t, you have to use a headless or lightweight browser.

So you come across a website that uses JavaScript to load data. What do you do? First, check the website in your real browser with JS disabled. There’s a good chance that the website loads and works fine even without JS (like Amazon.com). If you do need JS enabled to reach the data you want, there’s not much you can do but use a headless or lightweight browser to load the data for scraping.
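The "JS disabled" check can also be done from a script: fetch the raw HTML and look for the element that should hold your data. The following is a minimal sketch using only the standard library. The helper names `has_class` and `page_has_class` and the idea of keying on a CSS class are assumptions made for illustration, not part of Scrapy.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class _ClassFinder(HTMLParser):
    """Records whether any start tag carries the wanted CSS class."""

    def __init__(self, wanted):
        super().__init__()
        self.wanted = wanted
        self.found = False

    def handle_starttag(self, tag, attrs):
        # The class attribute may be missing or empty, hence the "or ''"
        classes = (dict(attrs).get("class") or "").split()
        if self.wanted in classes:
            self.found = True


def has_class(html, css_class):
    """Return True if the raw (non-rendered) HTML has a tag with css_class."""
    finder = _ClassFinder(css_class)
    finder.feed(html)
    return finder.found


def page_has_class(url, css_class):
    """Download url without running any JS and check for css_class."""
    with urlopen(url) as resp:
        return has_class(resp.read().decode("utf-8", errors="replace"), css_class)
```

If `page_has_class(url, "your-class")` returns True for the element you care about, the data is already in the plain HTML and a regular Scrapy Request should be enough.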

Headless and lightweight browsers

Headless browsers are real, full-fledged web browsers without a GUI, so you can drive them via an API or a command-line interface. Popular browsers like Firefox and Chrome have their own official web drivers. These browsers can run JS, so you can use them in your web scraper. Selenium is a popular tool for driving such browsers.

On the other hand, lightweight browsers are not fully functioning browsers. They implement only the main features, just enough to behave like real browsers. They can run JS as well. Splash is a lightweight browser.

When you choose between these two options for your web scraping project, you should consider one major factor: hardware resource requirements. As I mentioned, headless browsers are real, full-featured browser instances working in the background. That’s why they consume system resources like hell, which can be a nightmare considering that even a simple scraper makes thousands of requests while running. I highly discourage you from using Selenium for web scraping projects.

Instead, you should try Splash. It was created to render JS content only, which is exactly what you need for web scraping. This tutorial is a quick introduction to using Splash and Scrapy together to help you get started.

Install Splash

In order to install Splash you should have Docker already installed. If you haven’t, install it now with apt:

    sudo apt install docker.io

Using Docker you can pull the Splash image:

    sudo docker pull scrapinghub/splash

Now you can test whether Splash is installed properly. Keep in mind that you have to start the Splash server every time you want to use it:

    sudo docker run -p 8050:8050 scrapinghub/splash

This command will start Splash service on http://localhost:8050
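You can also check the running service from Python by calling Splash's HTTP API directly. The sketch below builds a `render.html` request with the `url` and `wait` arguments and, when called, downloads the rendered page. The helper names `render_html_url` and `fetch_rendered` are hypothetical, and the address assumes the docker command above on a local machine.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

SPLASH_URL = "http://localhost:8050"  # the port exposed by the docker command


def render_html_url(page_url, wait=0.5, splash_url=SPLASH_URL):
    """Build a render.html request URL for Splash's HTTP API."""
    query = urlencode({"url": page_url, "wait": wait})
    return f"{splash_url}/render.html?{query}"


def fetch_rendered(page_url):
    """Ask the running Splash container for the JS-rendered HTML of page_url."""
    with urlopen(render_html_url(page_url)) as resp:
        return resp.read().decode("utf-8")
```

If `fetch_rendered("http://quotes.toscrape.com/js/")` returns HTML instead of raising a connection error, the container is up and rendering pages.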

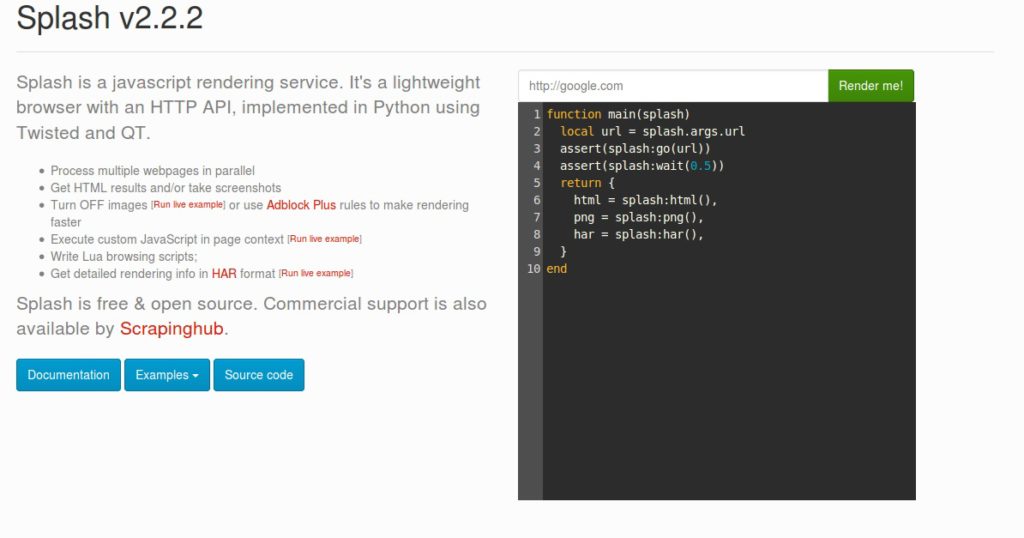

You will see this on the screen:

On the right side of the page you can render a website with Splash and then run a Lua script on it. When using Splash, you need to write Lua scripts in order to interact with JS elements (buttons, forms, etc.). This tutorial will not delve into Splash scripting, but you can learn about it in the Splash documentation.

Now it’s time to set up our Scrapy project to work with Splash properly. The easiest way to do that is with scrapy-splash. You can install it with pip:

    sudo pip install scrapy-splash

Then go to your Scrapy project’s settings.py and set these middlewares:

    DOWNLOADER_MIDDLEWARES = {
        'scrapy_splash.SplashCookiesMiddleware': 723,
        'scrapy_splash.SplashMiddleware': 725,
        'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,
    }

Set the URL of the Splash server (if you’re using Windows or OS X, this should be the URL of the Docker machine):

    SPLASH_URL = 'http://localhost:8050'

And finally you need to set these values too:

    DUPEFILTER_CLASS = 'scrapy_splash.SplashAwareDupeFilter'
    HTTPCACHE_STORAGE = 'scrapy_splash.SplashAwareFSCacheStorage'

Now you’ve integrated Scrapy and Splash properly. Let’s move on to how you can use it in your spider.

SplashRequest

In a normal spider you have Request objects which you can use to open URLs. If the page you want to open contains JS-generated data, you have to use SplashRequest (or SplashFormRequest) to render the page. Here’s a simple example:

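    import scrapy
    from scrapy_splash import SplashRequest


    class QuoteItem(scrapy.Item):
        # Assumed item definition; normally this lives in your project's items.py
        author = scrapy.Field()
        quote = scrapy.Field()


    class MySpider(scrapy.Spider):
        name = "jsscraper"
        start_urls = ["http://quotes.toscrape.com/js/"]

        def start_requests(self):
            # Send every start URL through Splash so the JS runs before parsing
            for url in self.start_urls:
                yield SplashRequest(url=url, callback=self.parse, endpoint='render.html')

        def parse(self, response):
            # The response holds the rendered HTML, so normal selectors work
            for q in response.css("div.quote"):
                quote = QuoteItem()
                quote["author"] = q.css(".author::text").extract_first()
                quote["quote"] = q.css(".text::text").extract_first()
                yield quote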

SplashRequest renders the URL as HTML and returns the response, which you can use in the callback (parse) method.

Have a look at the arguments you can give to SplashRequest:

- url: the URL of the page you want to scrape.

- callback: the method that will receive the (HTML) response of the request.

- endpoint: defines what kind of response you get. Possible values:

    - render.html: used by default. Returns the HTML of the rendered page.

    - render.png: returns a PNG screenshot of the rendered page.

    - render.jpeg: returns a JPEG screenshot of the rendered page.

    - render.json: returns information about the rendered page in JSON.

    - render.har: returns information, in HAR format, about the requests and responses Splash made while rendering the page.

    - execute: a special one. It executes your custom Lua script, passed as lua_source, to interact with or modify the page. The other args (such as js_source here) are exposed to the script as splash.args. As follows:

    yield SplashRequest(url=url, callback=self.parse, endpoint='execute', args={'lua_source': your_lua_script, 'js_source': your_js_code})
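To make the execute endpoint less abstract, here is a rough sketch of what such a request looks like when sent to Splash's HTTP API directly, without Scrapy. The Lua script is a minimal one that loads the page, waits, and returns the HTML. The helper names `build_execute_request` and `run_script` and the default wait of 0.5 seconds are assumptions chosen for illustration.

```python
import json
from urllib.request import Request, urlopen

SPLASH_URL = "http://localhost:8050"

# Lua script run by Splash: load the page, wait for JS, return the HTML.
LUA_SOURCE = """
function main(splash, args)
  assert(splash:go(args.url))
  assert(splash:wait(args.wait))
  return {html = splash:html()}
end
"""


def build_execute_request(page_url, lua_source=LUA_SOURCE, wait=0.5):
    """Build the JSON POST request for Splash's execute endpoint."""
    payload = {"url": page_url, "wait": wait, "lua_source": lua_source}
    return Request(
        f"{SPLASH_URL}/execute",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_script(page_url):
    """Send the script to a running Splash instance and decode its JSON reply."""
    with urlopen(build_execute_request(page_url)) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With scrapy-splash the same script would be passed as `args={'lua_source': LUA_SOURCE, 'wait': 0.5}` together with `endpoint='execute'`. Because the script returns a Lua table, the reply comes back as JSON.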

Splash responses

The returned response of a SplashRequest or SplashFormRequest can be:

- SplashResponse: binary response such as render.jpeg responses

- SplashTextResponse: render.html responses

- SplashJsonResponse: render.json responses

These objects extend Scrapy’s Response classes. So if you want to scrape data from the rendered page, use render.html as the endpoint and you’ll be able to use the response.css and response.xpath methods as usual.
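JSON responses need one extra step before you can use them. As a sketch of what that looks like, the function below unpacks a render.json reply, which includes an `html` field when the request asked for `html=1` and a base64-encoded `png` field when it asked for `png=1`. The function name `unpack_render_json` is hypothetical and the code works on the raw response body, so it does not depend on Scrapy.

```python
import base64
import json


def unpack_render_json(body):
    """Split a render.json reply into HTML text and PNG bytes (None if absent)."""
    data = json.loads(body)
    html = data.get("html")
    # Splash sends screenshots as base64 text inside the JSON document
    png = base64.b64decode(data["png"]) if "png" in data else None
    return html, png
```

Inside a spider callback you would not need to parse the body yourself: a SplashJsonResponse exposes the decoded dictionary as `response.data`.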